З Рiздвом Христовим − Merry Christmas − Frohe Weihnachten

Available for new Projects 2026

I am available for new projects starting in 2026.

I can support you with SW architecture, SW development, Linux, databases and security.

See IT Sky Consulting for more details on what I can offer.

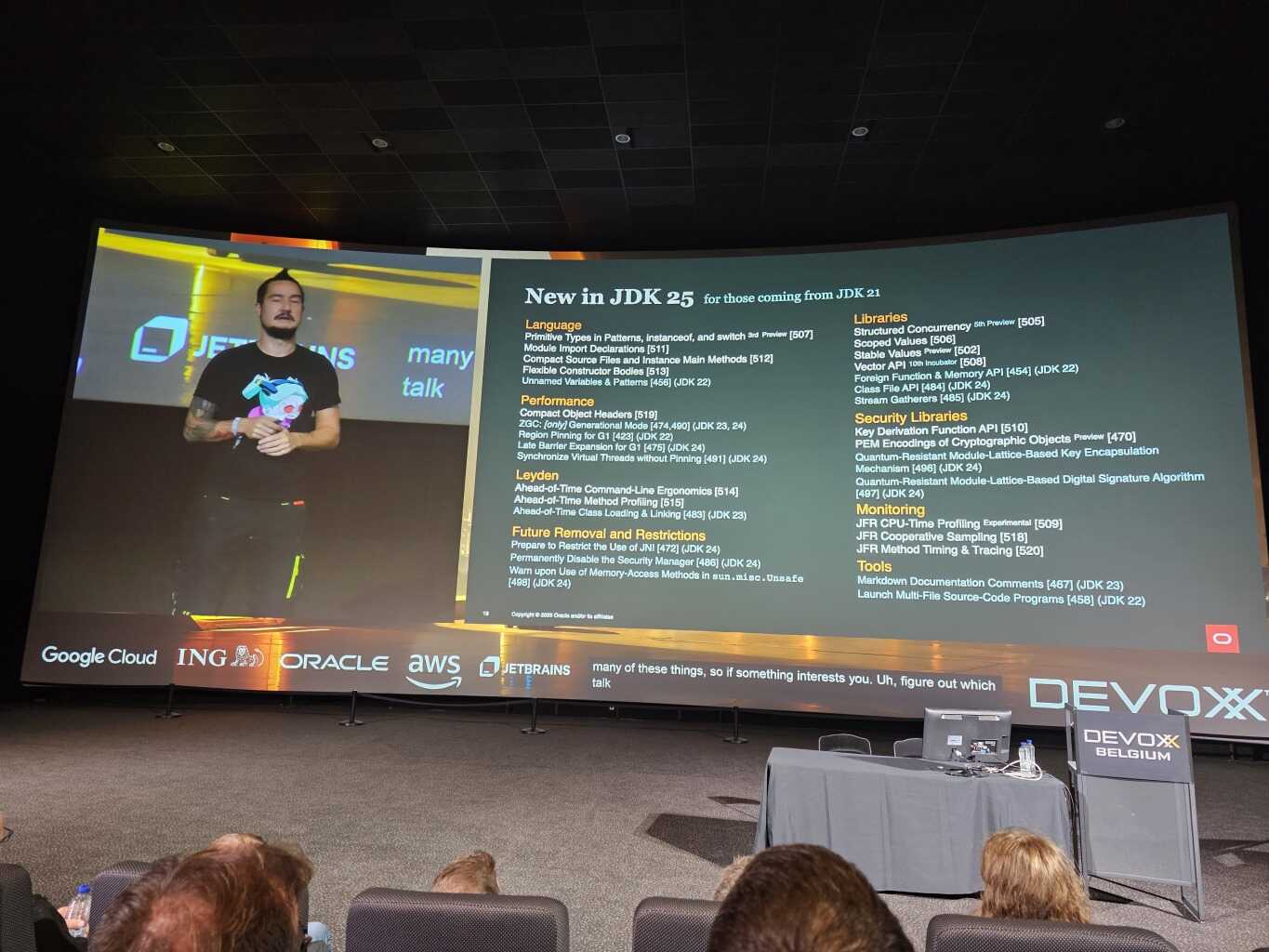

Devoxx 2025

In 2025 I visited the Devoxx conference in Antwerp from 2025-10-06 to 2025-10-10. As always, the first two days were „deep dive“ days with longer talks and fewer participants. They were followed by two intense days with many talks, mostly about 50 minutes long, and then by the Friday with only three talks and far fewer participants. A lot of people seem to skip the last day. I used to think it was because of the Belgian beer, since there were some evening events with beer on Thursday, but I now think it is just the desire to go home for the weekend. I recommend staying in Belgium for the weekend after the conference, at least once or twice.

Once again, AI was an important topic throughout the conference. The euphoria of the previous years was still present, and the organizer Stephan had done a lot of great development for the Devoxx web application using AI. The intro movie before the talks was also great, and it would not have been possible without AI.

Here are some photos of my impressions of the Devoxx:

Some Conference Talks

Here I have collected some conference talks that I have given in the past:

- reClojure London 2019: Clojure Art

- Devoxx Antwerp 2016: Why computers calculate wrong

- ScalaUA Kyiv 2019: Why Computers Calculate Wrong

- ScalaUA Kyiv 2018: The unexpected difficulties when moving to Scala

- ScalaUA Kyiv 2019: Know your Data

- Alpine Perl Workshop 2016: Why computers calculate wrong (with Perl 5 and Perl 6)

- ScalaUA Kyiv 2018: SQL or NoSQL: What DB-Technology to use for Scala project

- ScalaUA Kyiv 2017: Lightning Talk Rounding

- Swiss Perl Workshop 2017: Three great ways to use Perl and Java together

- ScalaUA Kyiv 2017: Some thoughts about immutability exemplified by sorting large amounts of data

Python

Python is actually a few years older than Java, but in the last couple of years it has been tremendously successful and it is considered „modern“ today.

Python comes in two major versions: Python 2 and Python 3. It took more than a decade for Python 3 to surpass Python 2, but it looks like that finally happened a few years ago. The Python community was fortunately strong enough to support both versions at a high level for such a long time. But, please, use Python 3 if you can, preferably a recent version.

I do recommend learning Python, because it is good to know these days. I would not call it a favorite language, though. My impression is that Ruby has similar capabilities but is a much more beautiful language. Perl is still a bit easier for „small tasks“, especially one-liners using regular expressions. Raku tried to be a better replacement for Perl and has some great concepts, but it didn’t really pick up momentum. Clojure is a fantastic language, but it poses two challenges for those who try to learn it: you need to embrace the Lisp syntax and the functional paradigm at the same time.

Then again, there are statically typed languages like Rust, Java, Kotlin, Scala, C#, F# or Swift that tend to eliminate some errors at compile time and that tend to run faster, especially in conjunction with full native compilation, for example using LLVM or GraalVM.

I do not like the syntax with „significant whitespace“, but since I use indentation to emphasize structure anyway, I would not call that a show stopper.

So what is cool about Python?

I think that it is relatively easy to learn for someone who already knows several programming languages. It is a multi-paradigm language which supports object-oriented, procedural and functional programming, and it pretty much follows the common sense of what can be found in other programming languages.
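As a small illustration of the three paradigms, here is a minimal sketch that computes the same value (the sum of the squares of the even numbers in a list) in procedural, functional and object-oriented style. The function and class names are made up for this example.

```python
from functools import reduce


def sum_even_squares_procedural(values):
    """Procedural style: explicit loop and a mutable accumulator."""
    total = 0
    for v in values:
        if v % 2 == 0:
            total += v * v
    return total


def sum_even_squares_functional(values):
    """Functional style: filter, then fold, without mutable state."""
    evens = filter(lambda v: v % 2 == 0, values)
    return reduce(lambda acc, v: acc + v * v, evens, 0)


class EvenSquareSummer:
    """Object-oriented style: the state lives in an object."""

    def __init__(self):
        self.total = 0

    def add(self, value):
        if value % 2 == 0:
            self.total += value * value
        return self  # allows chaining of add() calls


numbers = [1, 2, 3, 4, 5, 6]
summer = EvenSquareSummer()
for n in numbers:
    summer.add(n)
```

All three variants yield the same result for the same input. Which one reads best is mostly a matter of taste and of the surrounding code.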

The most important advantage comes from the fact that Python is heavily used in certain areas and that there are a lot of libraries available, especially in these areas. It has been among the most popular languages on the TIOBE Index for years; it is number 1 today (2025-04-10) and has been „number 1“ or at least „almost number 1“ since 2021.

I see the following areas:

- Scripting language for system engineering and related tasks (competing with and replacing Perl and Ruby and, to a lesser extent, Bash)

- Artificial Intelligence

- Data Science

- Scientific Computing

- Usage as embedded language

- Google is known to heavily rely on Python (among other languages)

- Many other organizations list Python among the „recommended programming languages“, but do not recommend using Perl or Ruby

- and of course a lot of programming as a general purpose language

At the end of the day, what counts are the results that can be achieved, and a lot of cool software has been written in Python.

I will use Python in the future and explore where it can be useful.

New Input Device

We have seen keyboards, mice, touchscreens, touchpads, voice recognition and even foot pedals as input devices.

But apparently this is all outdated technology. The newest generation of computers will come with some kind of rubber balloon which is squeezed in different ways. It has been proven that this can replace both keyboard and mouse and that after some practice it is much faster than a keyboard. The nice thing is that it also generates its own energy and does not need to be charged or connected with a cable.

The newest Gartner reports suggest that IT departments will save billions by applying this technology and bypassing the bottleneck keyboard+mouse.

2025

Christmas 2024

Split GPX file

GPX files are used to store planned and completed routes, for example for cycling.

A lot of web sites and applications allow creating and editing such routes.
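To give an idea of what such a file contains: a GPX track is an XML document with a list of track points, each carrying latitude and longitude as attributes and optionally elevation and time as child elements. The following minimal sketch reads the points with Python's standard library. The sample data and the name `read_track_points` are made up for illustration; real files from a route planner contain more metadata.

```python
import xml.etree.ElementTree as ET

# Namespace of GPX 1.1; the prefix "gpx" is only used inside this script
GPX_NS = {"gpx": "http://www.topografix.com/GPX/1/1"}

# Made-up sample data, for illustration only
SAMPLE_GPX = """<gpx version="1.1" creator="example" xmlns="http://www.topografix.com/GPX/1/1">
  <trk>
    <name>Sample route</name>
    <trkseg>
      <trkpt lat="47.0000" lon="8.0000"><ele>400.0</ele></trkpt>
      <trkpt lat="47.0010" lon="8.0020"><ele>405.5</ele></trkpt>
      <trkpt lat="47.0020" lon="8.0040"></trkpt>
    </trkseg>
  </trk>
</gpx>
"""


def read_track_points(gpx_text):
    """Return a list of (lat, lon, elevation or None) tuples."""
    root = ET.fromstring(gpx_text)
    points = []
    for trkpt in root.iterfind(".//gpx:trkpt", GPX_NS):
        ele = trkpt.find("gpx:ele", GPX_NS)
        elevation = float(ele.text) if ele is not None else None
        points.append((float(trkpt.get("lat")), float(trkpt.get("lon")), elevation))
    return points


track_points = read_track_points(SAMPLE_GPX)
```

The number of such track points is what makes long routes heavy to handle.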

In practice it matters for a trip from A to B whether there is one long route that covers the whole distance or many shorter routes. Long routes get hard to handle, because the software gets slow or crashes when the data set is too big. Short routes, on the other hand, make it more difficult to get an overview, and they require switching to another route during the day, which can be inconvenient.

In theory it is possible to just copy a long route and to delete parts of it to obtain shorter routes, but that does not work too well, if the route is already very long.

So one way to go is to make a long route with only rough planning, to have an overview and then split it into shorter routes that can then be modified to obtain a more accurate planning.

So the following program (written in Perl for Linux) will split a GPX file into n parts of approximately equal length, measured by the number of track points. It is quite simple, but I hope it will help to make this task a little bit easier.

License is GPL v3.

#!/usr/bin/perl -w

# (C) Karl Brodowsky 2024-06-09
# Licence GPL v3.0
# (see https://www.gnu.org/licenses/gpl-3.0.de.html )

use strict;
use utf8;
use Encode qw(decode encode);

binmode STDOUT, ":utf8";
binmode STDIN, ":utf8";

# GPX header template; <<TITLE>> is replaced by the title of each part
my $header =<<'EOH';
<?xml version='1.0' encoding='UTF-8'?>
<gpx version="1.1" creator="https://www.komoot.de" xmlns="http://www.topografix.com/GPX/1/1" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.topografix.com/GPX/1/1 http://www.topografix.com/GPX/1/1/gpx.xsd">
  <metadata>
    <name><<TITLE>></name>
    <author>
      <link href="https://www.komoot.de">
        <text>komoot</text>
        <type>text/html</type>
      </link>
    </author>
  </metadata>
  <trk>
    <name><<TITLE>></name>
    <trkseg>
EOH

my $footer =<<'EOF';
    </trkseg>
  </trk>
</gpx>
EOF

# print an optional error message and the usage text;
# exit with status 1 if there was an error message, otherwise with 0
sub usage($) {
    my $msg = $_[0];
    if ($msg) {
        chomp $msg;
        print $msg, "\n\n";
    }
    print <<"EOU";
USAGE
$0 <<FILE>> <<NAME>> <<NUMBER_OF_PARTS>>
EOU
    if ($msg) {
        exit 1;
    } else {
        exit 0;
    }
}

if (scalar(@ARGV) == 0 || $ARGV[0] =~ m/^-{0,2}help/) {
    usage("");
}
if (scalar(@ARGV) != 3) {
    usage("wrong number of arguments");
}

my $file = $ARGV[0];
my $file_u = decode("utf-8", $file);
unless (-f $file && -r $file) {
    usage("$file_u is not a readable file");
}
unless ($file =~ m/.+\.gpx$/) {
    usage("$file_u is not a gpx-file");
}

my $name = decode("utf-8", $ARGV[1]);
$name =~ s/^\s+//;
$name =~ s/\s+$//;
$name =~ s/\r//g;
unless (length($name) > 0) {
    usage("name must be at least 1 character");
}
# the name is inserted into the XML without escaping, so reject markup characters
if ($name =~ m/[<>"'&]/) {
    usage("name '$name' contains illegal characters");
}
print "name=$name\n";

my $number_of_parts = $ARGV[2];
unless ($number_of_parts =~ m/^\d+$/) {
    usage("number of parts must be a positive integer");
}
if ($number_of_parts < 2) {
    usage("at least 2 parts are required");
}

# "or" instead of "||": with "||" the error check would bind to $file, not to open
open(INPUT, "<:utf8", $file) or usage("cannot open $file_u");

# collect the track points; all other lines are just echoed to STDOUT
my @points = ();
my $point = "";
while (<INPUT>) {
    if (m/^\s*<trkpt/) {
        $point = $_;
    } elsif (m/^\s*<(ele|time)>/) {
        $point .= $_;
    } elsif (m/^\s*<\/trkpt>/) {
        $point .= $_;
        push @points, $point;
        $point = "";
    } else {
        print;
    }
}
close INPUT;
print "\n";

my $length = scalar(@points);

# (fractional) number of points per part; neighbouring parts share one point
sub part_length($$) {
    my ($remaining_length, $remaining_number_of_parts) = @_;
    ($remaining_length - 1) / $remaining_number_of_parts + 1;
}

my $part_length = part_length($length, $number_of_parts);
print "length = $length part_length = $part_length number_of_parts = $number_of_parts\n";
if ($part_length < 2) {
    usage("too many parts part_length=$part_length");
}

my $remaining_length;
my $remaining_number_of_parts = $number_of_parts;
my $i = 0;
while ($remaining_number_of_parts > 0) {
    $remaining_length = scalar(@points);
    # recalculate for each part, so that rounding errors do not accumulate
    $part_length = int(part_length($remaining_length, $remaining_number_of_parts) + 0.5);
    my $header_i = $header;
    my $title_i = sprintf("%s (%d)", $name, $i);
    $header_i =~ s/<<TITLE>>/$title_i/g;
    my $file_i = $file;
    $file_i =~ s/\.gpx$/_$i.gpx/;
    my $file_u_i = decode("utf-8", $file_i);
    print "i=$i remaining_number_of_parts=$remaining_number_of_parts remaining_length=$remaining_length\n";
    print "i=$i file_i=$file_u_i title_i=$title_i\n";
    open(OUTPUT, ">:utf8", $file_i) or die "cannot write $file_u_i: $!\n";
    print OUTPUT $header_i;
    for (my $j = 0; $j < $part_length; $j++) {
        my $point = $points[0];
        # keep the last point of this part: it is also the first point of the next part
        if ($j < $part_length - 1) {
            shift @points;
        }
        print OUTPUT $point;
    }
    print OUTPUT $footer;
    close OUTPUT;
    $i++;
    $remaining_number_of_parts--;
}
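The splitting arithmetic of the Perl program can also be looked at in isolation. The following is a minimal Python sketch of the same idea, not a replacement for the program: each part ends with the point that the next part starts with, and the part length is recalculated from the remaining points for every part. The name `split_points` is made up for this illustration, and plain integers stand in for the track points.

```python
def split_points(points, number_of_parts):
    """Split a list of points into overlapping parts of about equal length.

    Neighbouring parts share one point, so that the split routes connect.
    """
    if number_of_parts < 2:
        raise ValueError("at least 2 parts are required")
    if (len(points) - 1) / number_of_parts + 1 < 2:
        raise ValueError("too many parts")

    parts = []
    start = 0
    for remaining_parts in range(number_of_parts, 0, -1):
        remaining_length = len(points) - start
        # same formula as part_length() in the Perl program, rounded to nearest
        size = int((remaining_length - 1) / remaining_parts + 1 + 0.5)
        parts.append(points[start:start + size])
        # step back by one so that the boundary point is repeated in the next part
        start += size - 1
    return parts


example_parts = split_points(list(range(11)), 3)
```

With 11 points and 3 parts this gives parts of 4, 5 and 4 points, where the last point of each part is repeated as the first point of the next one.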

P.S. I plan to return to regular posts in this blog during this year.