The goal of spline approximation has already been explained in the previous article "Spline Approximation (Introduction)".

This article covers the mathematics behind this approximation and develops an approach. If you do not care about the mathematics, just skip this article and read "Spline Approximation (Cookbook)", which will follow soon.

Spline Interpolation

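We have points

$$(x_0, y_0), (x_1, y_1), \ldots, (x_n, y_n) \quad \text{with} \quad x_0 < x_1 < \ldots < x_n \tag{0.1}$$

and want a function $f$ such that

$$f(x_j) = y_j \quad (j = 0, \ldots, n)$$

and $f$ is continuous. Usually the requirement goes further, so the first and second derivatives should be continuous too.

The way this is accomplished is by defining cubic polynomials $p_j$ on each interval $[x_{j-1}, x_j]$ with $j = 1, \ldots, n$

such that

$$p_j(x_{j-1}) = y_{j-1}, \quad p_j(x_j) = y_j \quad (j = 1, \ldots, n) \tag{1}$$

$$p_j'(x_j) = p_{j+1}'(x_j) \quad (j = 1, \ldots, n-1) \tag{2}$$

$$p_j''(x_j) = p_{j+1}''(x_j) \quad (j = 1, \ldots, n-1) \tag{3}$$

Now this gives us $4n$ unknowns and, with the initial condition $f(x_j) = y_j$ contained in (1), $2n + 2(n-1) = 4n - 2$ equations. So this is underdetermined, which is usually resolved by adding two more or less arbitrary conditions. A lot of material about this can be found on the internet, in papers and in books.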

Spline Approximation

Now a more interesting case is that we actually have many more given points than spline intervals. So we have interval borders at points

$$x_0 < x_1 < \ldots < x_n$$

and we have given pairs

$$(\xi_1, \eta_1), (\xi_2, \eta_2), \ldots, (\xi_m, \eta_m)$$

with $m$ much larger than $n$. The exact condition will become clear later, but for the time being it should be assumed that $m$ is meant to be much larger than $n$. The values $\xi_k$ may contain duplicates, but in that case the number of different values for $\xi_k$ should also be much larger than $n$.

By the way, this is nothing new. Papers about this topic exist, but it is not as commonly found on the internet as interpolation.

From here onwards it is assumed that the intervals all have the same length, i.e. there is some positive real number $h$ such that $x_j - x_{j-1} = h$ for all $j = 1, \ldots, n$.

So we want the conditions (2) and (3) to be fulfilled, together with a weaker condition on the cubic pieces $p_j$ of $f$

$$p_j(x_j) = p_{j+1}(x_j) \quad (j = 1, \ldots, n-1) \tag{1a}$$

and we want $f(\xi_k)$ to be "somewhat close" to $\eta_k$ for all points $k = 1, \ldots, m$. More precisely, it should be as close as possible on "average", where the quadratic mean is used as "average". That is common practice, allows for smooth formulas and works. To just minimize the quadratic mean, taking the square root and dividing by $m$ can be omitted. So this can be made explicit by requiring the sum of the squares of the differences to be minimal, i.e.

$$\sum_{k=1}^{m} \left( f(\xi_k) - \eta_k \right)^2 \to \min \tag{4}$$
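The quantity to be minimized can be sketched in a few lines of Python. This is only an illustration: the names `sum_of_squares`, `quadratic_mean`, `xi` and `eta` are chosen here and do not come from the article, and `f` stands for any candidate function.

```python
import math


def sum_of_squares(f, xi, eta):
    """Sum of squared differences between f(xi_k) and eta_k."""
    return sum((f(x) - y) ** 2 for x, y in zip(xi, eta))


def quadratic_mean(f, xi, eta):
    """Quadratic mean of the differences: sqrt(sum_of_squares / m)."""
    return math.sqrt(sum_of_squares(f, xi, eta) / len(xi))


# Hypothetical sample data and a hypothetical candidate function
xi = [0.0, 1.0, 2.0]
eta = [0.0, 2.0, 5.0]
candidate = lambda x: 2.0 * x

total = sum_of_squares(candidate, xi, eta)
mean = quadratic_mean(candidate, xi, eta)
```

Since the square root is monotonic and the number of points is fixed, a function that minimizes `sum_of_squares` also minimizes `quadratic_mean`.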

By the way, this can be done perfectly well with complex-valued functions; we just need to replace the squares by the squares of the absolute values. So on the "$\eta$-side" we can have complex numbers. Allowing complex numbers on the "$\xi$-side" is a bit more involved, because being differentiable twice implies that the function would be holomorphic, so combining different functions is impossible. And even complex-valued functions would become discontinuous at the glue lines if we simply applied them to the whole complex plane. So, for the time being, real numbers are assumed.

Now the valid spline functions on the given set of intervals obviously form a vector space. Conditions (1a), (2) and (3) remain valid when we multiply by a constant or add two such functions. Having $4n$ parameters and $3(n-1)$ independent conditions, its dimension should be $4n - 3(n-1) = n + 3$. This can be proved by induction. For $n = 1$ any cubic polynomial (of degree $\le 3$) can be used. These form a 4-dimensional vector space. Assuming that for $n$ subintervals the valid spline functions form a vector space of dimension $n + 3$, then for $n + 1$ subintervals the additional subinterval $[x_n, x_{n+1}]$ is added. In this subinterval, the function can be expressed as

$$p_{n+1}(x) = a_3 (x - x_n)^3 + a_2 (x - x_n)^2 + a_1 (x - x_n) + a_0$$

Conditions (1a), (2) and (3) already fix the values for $a_0$, $a_1$ and $a_2$, while $a_3$ can be chosen freely. Thus the dimension is exactly one higher and the assumption is proved.

Now a basis for this vector space should be found. Ideally it consists of functions that are non-zero only in a small range, because they are easier to handle and easier to calculate.

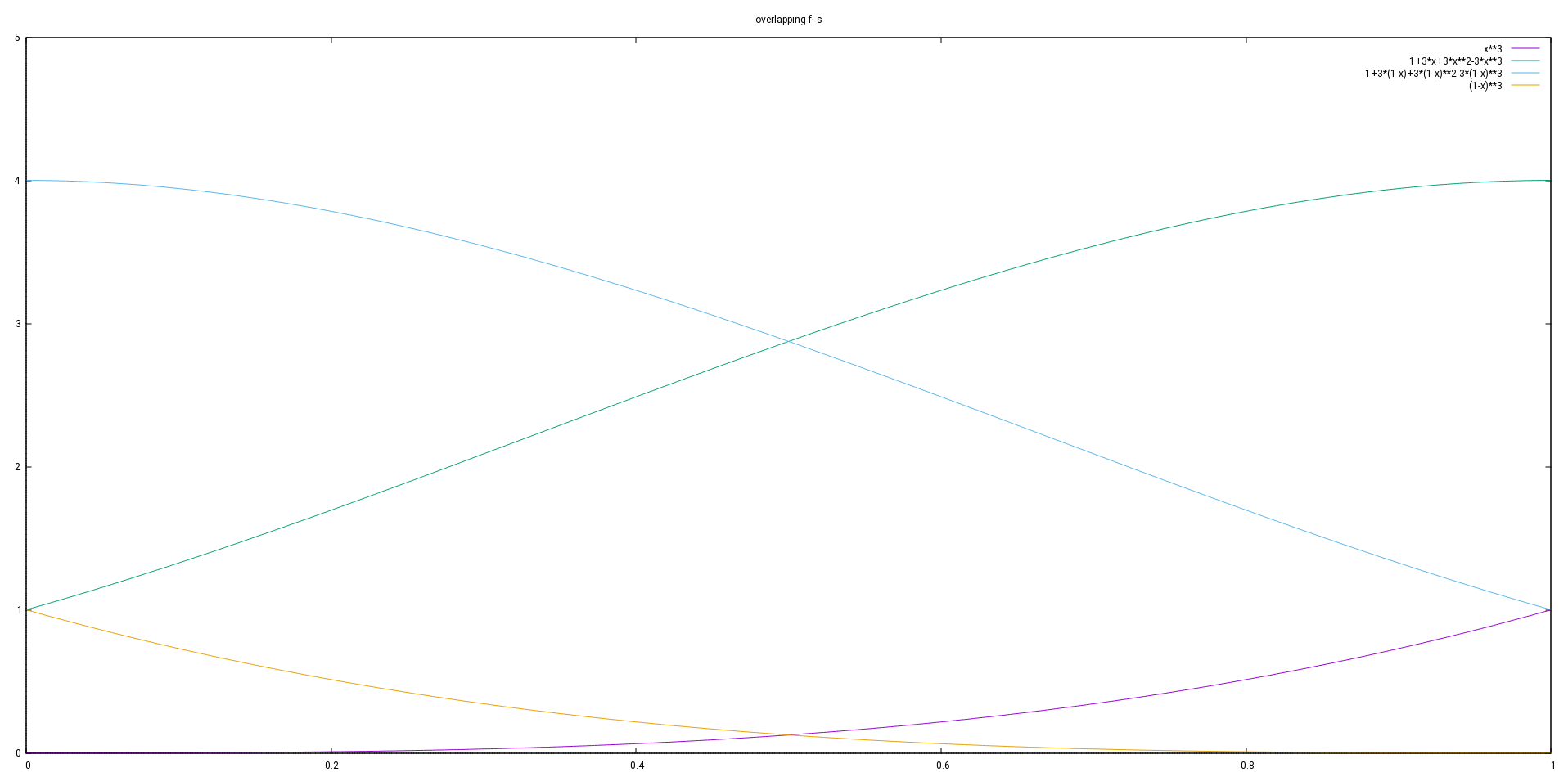

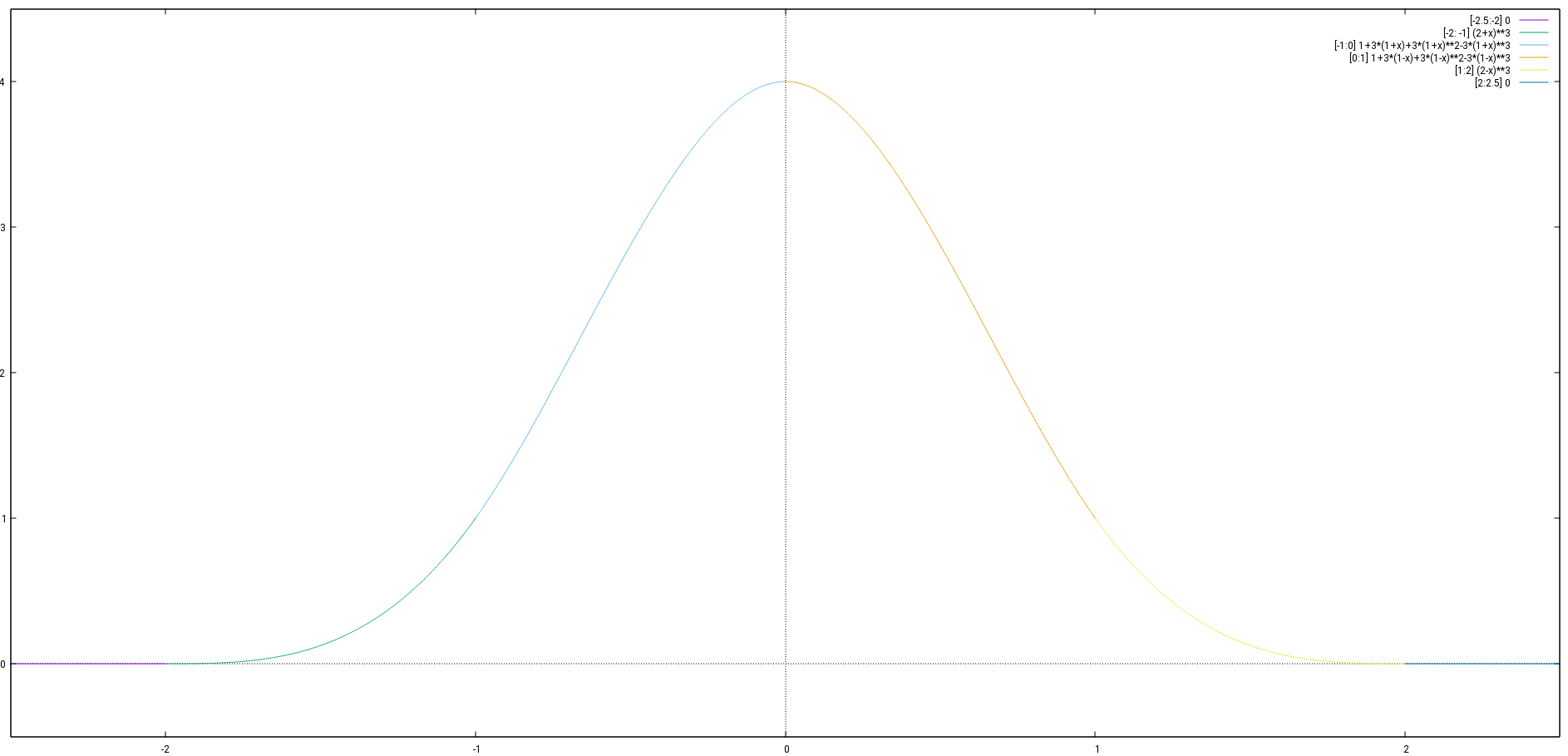

This can be accomplished by a function that looks like this:

Note that this is not the Gaussian function curve, which never actually becomes zero. The function we are looking for should actually be $0$ outside of a given range. So assume it is $0$ for $x \le -c$ and $0$ for $x \ge c$ for some constant $c > 0$. This implies that the first and second derivatives are $0$ for $x = \pm c$. So in the subinterval starting at $-c$ it needs to be a cubic polynomial of the form $a(x+c)^3$. So further subintervals are needed to return to $0$. For reasons of symmetry there should be a subinterval ending at $c$ in which the function takes the form $-a(x-c)^3$. Using a third subinterval $[-b, b]$ with $0 < b < c$ for the whole middle part would imply that this has to be an even function, thus of the form $u x^2 + v$.

$v$ could be determined as $v = a(c-b)^3 - u b^2$ from continuity at $x = b$. According to the first derivative condition we would have $2ub = -3a(c-b)^2$, thus $u = -\frac{3a(c-b)^2}{2b}$. According to the second derivative condition we would have

$$2u = 6a(c-b)$$

thus

$$-\frac{3a(c-b)^2}{b} = 6a(c-b)$$

thus

$$b = -c$$

This contradicts $0 < b < c$, so three subintervals are not adequate, whether or not they have equal length.

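Using a total of four subintervals actually works. In this case, for the subinterval $[-\frac{c}{2}, 0]$ four conditions are given to determine the four coefficients of the cubic function.

For readability it will be assumed that $h = 1$ and $c = 2$, so the subintervals are $[-2, -1]$, $[-1, 0]$, $[0, 1]$ and $[1, 2]$. The function can be chosen as

$$g(x) = (x+2)^3 \quad \text{for } x \in [-2, -1]$$

Now $g(x) = \alpha x^3 + \beta x^2 + \gamma x + \delta$ needs to be defined in $[-1, 0]$ such that

$$g(-1) = 1, \quad g'(-1) = 3, \quad g''(-1) = 6, \quad g'(0) = 0$$

Thus $\gamma = 0$, $3\alpha - 2\beta = 3$, $-6\alpha + 2\beta = 6$ and $-\alpha + \beta + \delta = 1$.

Thus $\alpha = -3$, $\beta = -6$ and $\delta = 4$.

So the prototype function is

$$g(x) = \begin{cases} 0 & \text{for } x \le -2 \\ (x+2)^3 & \text{for } -2 \le x \le -1 \\ -3x^3 - 6x^2 + 4 & \text{for } -1 \le x \le 0 \\ 3x^3 - 6x^2 + 4 & \text{for } 0 \le x \le 1 \\ (2-x)^3 & \text{for } 1 \le x \le 2 \\ 0 & \text{for } x \ge 2 \end{cases} \tag{5}$$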
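As a minimal sketch, the prototype function for $h = 1$ can be written in Python as follows. It assumes the piecewise form derived from the conditions above: $(x+2)^3$ on $[-2,-1]$, $-3x^3 - 6x^2 + 4$ on $[-1,0]$, mirrored for positive $x$, and $0$ outside $[-2, 2]$. The function name `g` matches the text; using `abs` to exploit the symmetry is a choice made here.

```python
def g(x):
    """Prototype function with support [-2, 2], for subinterval length h = 1."""
    t = abs(x)  # g is even, so only the absolute value matters
    if t >= 2.0:
        return 0.0
    if t >= 1.0:
        # outer pieces: (x + 2)^3 on [-2, -1] and (2 - x)^3 on [1, 2]
        return (2.0 - t) ** 3
    # inner pieces: -3x^3 - 6x^2 + 4 on [-1, 0] and 3x^3 - 6x^2 + 4 on [0, 1]
    return 3.0 * t ** 3 - 6.0 * t ** 2 + 4.0


# Values at the interval borders
border_values = [g(x) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
```

At the borders this gives the values 0, 1, 4, 1, 0, so the pieces join continuously.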

A basis for this vector space can be found using functions $g_j$ for $j = -1, 0, \ldots, n+1$. For readability purposes we define $x_j = x_0 + jh$ even for negative $j$ and $j > n$.

The functions $g_j$ are defined such that

$$g_j(x) = g\left(\frac{x - x_j}{h}\right)$$

These functions fulfill conditions (1a), (2) and (3), because they inherit that from $g$.
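The shifted and scaled functions can be sketched as follows, assuming $g_j(x) = g((x - x_j)/h)$ with $x_j = x_0 + jh$ and the prototype $g$ with support $[-2, 2]$. The helper name `basis` and the sample numbers are hypothetical and only serve as illustration.

```python
def g(x):
    """Prototype function with support [-2, 2], for h = 1."""
    t = abs(x)
    if t >= 2.0:
        return 0.0
    if t >= 1.0:
        return (2.0 - t) ** 3
    return 3.0 * t ** 3 - 6.0 * t ** 2 + 4.0


def basis(j, x, x0, h):
    """Value of g_j at x, assuming g_j(x) = g((x - x_j) / h), x_j = x0 + j*h."""
    return g((x - (x0 + j * h)) / h)


# Hypothetical example: n = 3 subintervals of length h = 0.5 starting at x0 = 1.0
x0, h, n = 1.0, 0.5, 3
x = 1.7
values = {j: basis(j, x, x0, h) for j in range(-1, n + 2)}
active = [j for j, v in values.items() if v != 0.0]
```

For any $x$ inside a subinterval only four of the $n+3$ functions are non-zero, which is what makes this basis convenient. With this normalization their values add up to 6.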

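By induction it can be proved that they are linearly independent on $[x_0, x_n]$. Assume that $\sum_{j=-1}^{n+1} \lambda_j g_j = 0$. At $x_0$ only $g_{-1}$, $g_0$ and $g_1$ have a non-zero value, first derivative or second derivative, and the vectors $(g_j(x_0), h g_j'(x_0), h^2 g_j''(x_0))$ are $(1, -3, 6)$, $(4, 0, -12)$ and $(1, 3, 6)$, which are linearly independent. So $\lambda_{-1} = \lambda_0 = \lambda_1 = 0$. If $\lambda_{-1} = \ldots = \lambda_j = 0$ for some $j$ with $1 \le j \le n$, then also $\lambda_{j+1} = 0$, because

$$g_{j+1}(x_j) = g(-1) = 1 \ne 0$$

and

$$g_i(x_j) = 0 \quad \text{for } i \ge j + 2$$

Since $\{g_{-1}, g_0, \ldots, g_{n+1}\}$ contains exactly $n + 3$ elements, it is a vector space basis.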

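That means that we are searching for a function

$$f(x) = \sum_{j=-1}^{n+1} \lambda_j g_j(x) \tag{6}$$

such that the minimality condition (4) holds.

This is accomplished by filling (6) into (4) and calculating the partial derivatives with respect to each $\lambda_i$:

$$\frac{\partial}{\partial \lambda_i} \sum_{k=1}^{m} \Big( \sum_{j=-1}^{n+1} \lambda_j g_j(\xi_k) - \eta_k \Big)^2 = 2 \sum_{k=1}^{m} g_i(\xi_k) \Big( \sum_{j=-1}^{n+1} \lambda_j g_j(\xi_k) - \eta_k \Big) = 0$$

Thus

$$\sum_{j=-1}^{n+1} \lambda_j \sum_{k=1}^{m} g_i(\xi_k) g_j(\xi_k) = \sum_{k=1}^{m} \eta_k g_i(\xi_k) \quad (i = -1, \ldots, n+1)$$

So it comes down to solving the linear equation system

$$M \lambda = r \quad \text{with} \quad M_{ij} = \sum_{k=1}^{m} g_i(\xi_k) g_j(\xi_k), \quad r_i = \sum_{k=1}^{m} \eta_k g_i(\xi_k)$$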
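Setting up this linear system could look roughly like the following sketch. It assumes the matrix entries are $\sum_k g_i(\xi_k) g_j(\xi_k)$ and the right-hand side entries are $\sum_k \eta_k g_i(\xi_k)$, with $g_j(x) = g((x - x_0 - jh)/h)$ for $j = -1, \ldots, n+1$. The function names and the plain nested lists are choices made here, not the article's program.

```python
def g(x):
    """Prototype function with support [-2, 2], for h = 1."""
    t = abs(x)
    if t >= 2.0:
        return 0.0
    if t >= 1.0:
        return (2.0 - t) ** 3
    return 3.0 * t ** 3 - 6.0 * t ** 2 + 4.0


def basis(j, x, x0, h):
    """Value of g_j at x, assuming g_j(x) = g((x - x0 - j*h) / h)."""
    return g((x - (x0 + j * h)) / h)


def normal_equations(xi, eta, x0, h, n):
    """Build matrix and right-hand side; row/column 0 corresponds to j = -1."""
    size = n + 3
    matrix = [[0.0] * size for _ in range(size)]
    rhs = [0.0] * size
    for x, y in zip(xi, eta):
        values = [basis(j, x, x0, h) for j in range(-1, n + 2)]
        for r in range(size):
            if values[r] == 0.0:
                continue  # g_i vanishes here, nothing to add to this row
            rhs[r] += y * values[r]
            for c in range(size):
                matrix[r][c] += values[r] * values[c]
    return matrix, rhs


# Hypothetical tiny example: one subinterval [0, 1] and a single data point
matrix, rhs = normal_equations([0.0], [2.0], 0.0, 1.0, 1)
```

With a single data point the system is of course singular; it only becomes solvable once there are enough distinct $\xi_k$ spread over the subintervals.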

This can be solved using a variant of the Gaussian elimination algorithm. Since this is a numerical problem, it is important to deal with the issue of rounding. Generally it is recommended to choose the pivot element wisely.

In this case the approach chosen is to iterate through the columns. For each column, the row is chosen in which the element in that column has the largest absolute value relative to the cubic mean of the absolute values of the other entries in that row.
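The pivot rule described above might be sketched like this. Several details are assumptions made for the example: the cubic mean is taken as $(\frac{1}{N}\sum |a|^3)^{1/3}$, the "other entries" are the not yet eliminated coefficients of the row without the right-hand side, and the elimination is a plain forward elimination followed by back substitution. The names `cubic_mean` and `solve` are hypothetical.

```python
def cubic_mean(values):
    """Cubic mean of the absolute values; 0.0 for an empty list."""
    if not values:
        return 0.0
    return (sum(abs(v) ** 3 for v in values) / len(values)) ** (1.0 / 3.0)


def solve(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with scaled row pivoting."""
    n = len(rhs)
    # Work on an augmented copy so the inputs stay untouched
    a = [[float(v) for v in row] + [float(b)] for row, b in zip(matrix, rhs)]
    for col in range(n):
        best_row, best_score = None, -1.0
        for row in range(col, n):
            pivot = abs(a[row][col])
            if pivot == 0.0:
                continue
            scale = cubic_mean([a[row][k] for k in range(col + 1, n)])
            score = pivot / scale if scale > 0.0 else float("inf")
            if score > best_score:
                best_row, best_score = row, score
        if best_row is None:
            raise ValueError("matrix is singular")
        a[col], a[best_row] = a[best_row], a[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n + 1):
                a[row][k] -= factor * a[col][k]
    # Back substitution on the upper triangular system
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        rest = sum(a[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (a[row][n] - rest) / a[row][row]
    return x


solution = solve([[2.0, 1.0], [1.0, 3.0]], [3.0, 5.0])
swapped = solve([[0.0, 1.0], [1.0, 0.0]], [2.0, 3.0])
```

The second call shows why some pivot selection is needed at all: without exchanging rows, the first pivot would be zero.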

When actually using the spline function a lot, it is probably a good idea to consolidate the linear combinations of different $g_j$ within each subinterval into a cubic polynomial of the form

$$p(x) = c_3 (x - A)^3 + c_2 (x - A)^2 + c_1 (x - A) + c_0$$

Here $A$ can be the starting point of the interval, the end point or some point in the middle, probably the arithmetic mean of the interval borders. These choices of $A$ have some advantages, because they make the terms that need to be added smaller in absolute value. Since the exact end result is the same anyway, this helps to avoid rounding errors, which can go terribly wrong when adding (or subtracting) terms with large absolute values where the result is much smaller than the terms. So the arithmetic mean of the subinterval borders might be the best choice.
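One way to do this consolidation is sketched below, under stated assumptions. On a subinterval $[x_{i-1}, x_i]$ only $g_{i-2}$, $g_{i-1}$, $g_i$ and $g_{i+1}$ are non-zero, and with $t = (x - x_{i-1})/h$ their pieces are $(1-t)^3$, $3t^3 - 6t^2 + 4$, $-3t^3 + 3t^2 + 3t + 1$ and $t^3$. The sketch sums these pieces and re-expands the result around the midpoint, using the scaled variable $s = (x - A)/h$ rather than $x - A$ itself. The names `PIECES`, `consolidate` and `evaluate` are hypothetical.

```python
# Coefficients (c0, c1, c2, c3) in t of the pieces of g_{i-2}, g_{i-1}, g_i,
# g_{i+1} on the subinterval, where t = (x - left border) / h and 0 <= t <= 1
PIECES = [
    (1.0, -3.0, 3.0, -1.0),  # (1 - t)^3
    (4.0, 0.0, -6.0, 3.0),   # 3t^3 - 6t^2 + 4
    (1.0, 3.0, 3.0, -3.0),   # -3t^3 + 3t^2 + 3t + 1
    (0.0, 0.0, 0.0, 1.0),    # t^3
]


def consolidate(lams):
    """Combine the four active coefficients into one cubic in s = t - 1/2."""
    c0, c1, c2, c3 = (
        sum(lam * piece[k] for lam, piece in zip(lams, PIECES)) for k in range(4)
    )
    d = 0.5  # shift from the left border to the midpoint, in units of h
    return (
        c0 + c1 * d + c2 * d ** 2 + c3 * d ** 3,  # value at the midpoint
        c1 + 2.0 * c2 * d + 3.0 * c3 * d ** 2,    # first derivative there
        c2 + 3.0 * c3 * d,                        # half the second derivative
        c3,
    )


def evaluate(coeffs, x, left, h):
    """Evaluate the consolidated cubic at x with Horner's scheme."""
    s = (x - left) / h - 0.5
    d0, d1, d2, d3 = coeffs
    return ((d3 * s + d2) * s + d1) * s + d0


# Hypothetical coefficients: equal weights give a constant function
constant = consolidate((1.0, 1.0, 1.0, 1.0))
last_only = consolidate((0.0, 0.0, 0.0, 1.0))
```

After consolidation, each evaluation needs only three multiplications and three additions, and $|s| \le \frac{1}{2}$ keeps the individual terms small.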

The actual formulas and a program will be added in one or two articles in the near future.
