Self-Written Util Libraries

Today our programming languages come with really good libraries that cover a lot of ground. The funny thing is that we usually still end up writing util classes like StringUtil, CollectionUtil, NumberUtil etc. for common tasks that are missing from the libraries we use. Usually this is no big deal, and the methods are trivial to write. But not having them in the library results in several slightly different ad hoc solutions for the same problem, sometimes flawless, sometimes somewhat weak, spread throughout the code. Eventually some „tools“, „utils“ or „helper“ classes may unify them and cover them in a somewhat reasonable way.

Imposing Util Libraries on all Developers

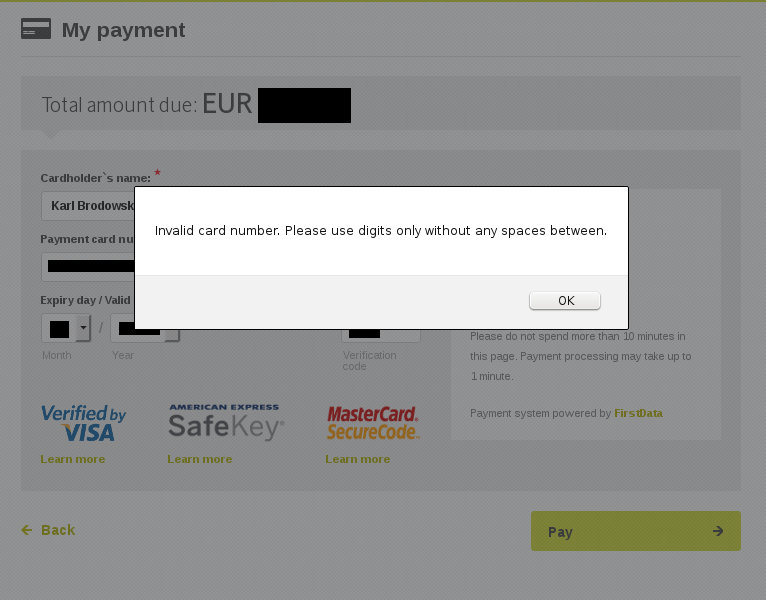

In the worst case these self-written library classes really suck, but are imposed on the developers anyway. Many years ago it was „company standard“ to use a common library for localizing strings. The concept was kind of nice, but it had its flaws. First, there was a company-wide database for localized strings in order to save on translation costs, but the overhead was considerable, and there was a real chance that the same short string meant something different in the context of different applications. This could be addressed by creating a label that somehow included the application ID, thus bypassing the overhead whenever a collision was detected. What was worse, each new string made it into a header file, and that caused the whole application to be recompiled, unless a hand-written makefile skipped this dependency. That was of course against company policy as well, and it meant a lot of work. In those days compiling the whole application took about 8 (eight!) hours. Maybe seven. So after adding one string it took 8 hours of compile time before work could continue. Anyway, there was another implementation of the same concept for another operating system, which used hash tables and did not require recompilation. It carried the risk of runtime errors because of undefined strings, but it was at least reasonable to work with. I ported this library to the operating system that I was using and used it, and during each meeting I had to commit to the long-term goal of changing to the broken library, which of course never happened, because there were always higher priorities.

I think the lesson we can already learn is that libraries that are written internally and imposed on all developers should be done really well. Senior developers should be involved, and if the company does not have them, they should be hired externally for the development. Not to do the whole development, but to help do it right.

Need for Util libraries

So why not just go with the given libraries? Or download some more? Depending on the language there are really good libraries around. Sometimes that is the way to go. Sometimes it is good to write a good util library internally. But then it is important to do it well, to include only stuff that is actually needed or reasonably likely to be needed, and to avoid major effort for reinventing the wheel. Some obscure libraries actually become obsolete when the main default library gets improved.

Example: Trigonometric and other Mathematical Functions

Most of us do not do a lot of floating point arithmetic, and consequently we do not need the trigonometric functions like $\sin$ and $\cos$, other transcendental functions like $\exp$ and $\log$, or functions like cube root ($\sqrt[3]{x}$) a lot. Where the default set of these functions ends is somewhat arbitrary, but of course we need to go to special libraries at some point for more special functions. We can look at what early calculators used to have and what advanced math text books in schools cover. We have to consider the fact that the commonly used set of trigonometric functions differs from country to country. Americans tend to use six of them, $\sin$, $\cos$, $\tan$, $\cot$, $\sec$ and $\csc$, which is kind of beautiful, because it really completes the set. Germans tend to use only $\sin$, $\cos$, $\tan$ and $\cot$, which is not as beautiful, but at least avoids the division by zero and the issue of transforming $\frac{1}{\cos x}$ to $\sec x$. Calculators usually had only $\sin$, $\cos$ and $\tan$. But they offered them in three flavors, with modes of „DEG“, „RAD“ and „GRAD“. The third one was kind of an attempt to metricize degrees by having $100$ instead of $90$ for a right angle, which seems to be a dead idea. Of course in advanced mathematics and physics „RAD“, which uses $\frac{\pi}{2}$ instead of $90$, is common, and that is what all programming languages that I know use, apart from the calculators. Just to explain the functions for those who are not familiar with the whole set, we can express the last four in terms of $\sin$ and $\cos$:

$\tan x = \frac{\sin x}{\cos x}$ (tangent)

$\cot x = \frac{\cos x}{\sin x}$ (cotangent)

$\sec x = \frac{1}{\cos x}$ (secans)

$\csc x = \frac{1}{\sin x}$ (cosecans)
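As a rough illustration of how little code the missing trigonometric functions need, here is a minimal Java sketch. The class name `TrigSketch` and all method names are hypothetical and not part of any existing library. It derives the reciprocal functions from `Math.sin` and `Math.cos` as in the formulas above and adds a „DEG“ flavor via `Math.toRadians`. It deliberately does nothing special at the poles, where the division yields infinities or very large values.

```java
/** Hypothetical helper class; names are illustrative, not an existing API. */
final class TrigSketch {
    private TrigSketch() {}

    // Reciprocal functions expressed through sin and cos (radian flavor).
    static double cot(double x) { return Math.cos(x) / Math.sin(x); }
    static double sec(double x) { return 1.0 / Math.cos(x); }
    static double csc(double x) { return 1.0 / Math.sin(x); }

    // "DEG" flavor: convert degrees to radians, then reuse the radian version.
    static double sinDeg(double degrees) { return Math.sin(Math.toRadians(degrees)); }
    static double cosDeg(double degrees) { return Math.cos(Math.toRadians(degrees)); }
    static double cotDeg(double degrees) { return cot(Math.toRadians(degrees)); }

    public static void main(String[] args) {
        System.out.println("cot(pi/4)  = " + cot(Math.PI / 4));
        System.out.println("sec(0)     = " + sec(0.0));
        System.out.println("sinDeg(30) = " + sinDeg(30.0));
    }
}
```

A production version would have to decide how to treat arguments at or near the poles and how to keep precision for large degree values, which this sketch leaves open.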

Then we have the inverse trigonometric functions, which can be denoted with something like $\arcsin$ or $\sin^{-1}$ for all six trigonometric functions. There is an irregularity to keep in mind. We write $\sin^n x$ instead of $(\sin x)^n$ for $n = 2, 3, \ldots$, which is the product of that number of $\sin x$ terms. And we use $\sin^{-1} x$ to apply the function „$\sin$“ $-1$ times, which is actually the inverse function. Mathematicians have invented this irregularity and usually it is convenient, but it confuses those who do not know it. Of these functions many programming languages offer only $\arctan$, assuming the other five can be created from that. This is true, but cumbersome, because it requires distinguishing a lot of cases using something like if, so there are likely to be many bugs in software doing this. Also these ad hoc implementations lose some precision.
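To make the case distinctions concrete, here is a hedged Java sketch that builds arcsine and arccosine from `Math.atan` alone. The class and method names are made up for illustration. It uses the identity $\arcsin x = \arctan\frac{x}{\sqrt{1-x^2}}$ for $|x| < 1$ and handles the boundary and out-of-range arguments separately. Java itself already ships `Math.asin` and `Math.acos`, so this only shows what one would have to write where they are missing.

```java
/** Hypothetical sketch: arcsine and arccosine built from arctangent only. */
final class InverseTrigSketch {
    private InverseTrigSketch() {}

    static double asinViaAtan(double x) {
        // Outside [-1, 1] (or NaN) there is no real result.
        if (Double.isNaN(x) || x < -1.0 || x > 1.0) {
            return Double.NaN;
        }
        // At the boundaries the generic formula would divide by zero.
        if (x == 1.0) {
            return Math.PI / 2;
        }
        if (x == -1.0) {
            return -Math.PI / 2;
        }
        // (1 - x) * (1 + x) loses less precision near |x| = 1 than 1 - x * x.
        return Math.atan(x / Math.sqrt((1.0 - x) * (1.0 + x)));
    }

    static double acosViaAtan(double x) {
        // acos(x) = pi/2 - asin(x); NaN propagates for invalid arguments.
        return Math.PI / 2 - asinViaAtan(x);
    }

    public static void main(String[] args) {
        System.out.println("asinViaAtan(0.5) = " + asinViaAtan(0.5));
        System.out.println("Math.asin(0.5)   = " + Math.asin(0.5));
        System.out.println("acosViaAtan(0.5) = " + acosViaAtan(0.5));
    }
}
```

Even this small example needs four branches for one function, which is the kind of detail that ad hoc copies scattered through a code base tend to get wrong.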

It was also common to have a conversion from polar coordinates to rectangular coordinates (p2r) and vice versa (r2p), which is kind of cool and again easy, but not too trivial to do ad hoc. Something like atan2 in FORTRAN, which does the essence of the harder r2p operation, would also work, depending on how convenient it is to deal with multiple return values. We can then do r2p using $r = \sqrt{x^2 + y^2}$, $\varphi = \operatorname{atan2}(y, x)$ and p2r by $x = r \cos\varphi$ and $y = r \sin\varphi$.
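A minimal Java sketch of the two conversions could look as follows. The class `PolarSketch` and the choice of a two-element `double[]` as a stand-in for multiple return values are assumptions made for this example. `Math.hypot` and `Math.atan2` are standard Java methods.

```java
/** Hypothetical sketch of r2p and p2r; results are returned as double[2]. */
final class PolarSketch {
    private PolarSketch() {}

    /** Rectangular (x, y) to polar {r, phi}, with phi in radians. */
    static double[] r2p(double x, double y) {
        // hypot computes sqrt(x^2 + y^2) without intermediate overflow.
        return new double[] { Math.hypot(x, y), Math.atan2(y, x) };
    }

    /** Polar (r, phi) to rectangular {x, y}, with phi in radians. */
    static double[] p2r(double r, double phi) {
        return new double[] { r * Math.cos(phi), r * Math.sin(phi) };
    }

    public static void main(String[] args) {
        double[] polar = r2p(3.0, 4.0);
        double[] rect = p2r(polar[0], polar[1]);
        System.out.println("r = " + polar[0] + ", phi = " + polar[1]);
        System.out.println("x = " + rect[0] + ", y = " + rect[1]);
    }
}
```

In a language with tuples or records the array would be replaced by a proper pair type, which is the convenience question mentioned above.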

The hyperbolic functions like $\sinh$, their inverses like $\operatorname{arsinh}$ or $\sinh^{-1}$ are rarely used, but we find them on the calculator and in the math book, so we should have them in the standard floating point library. There is only one flavor of them.
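Java offers `Math.sinh`, `Math.cosh` and `Math.tanh`, but not their inverses. The following sketch shows one possible way to fill that gap using the logarithmic forms of the inverse hyperbolic functions. The class name is hypothetical, and the use of `Math.log1p` to keep precision for small arguments is a choice made for this example, not a claim about how any standard library does it. Very large arguments, where `x * x` overflows, are not treated specially.

```java
/** Hypothetical sketch of inverse hyperbolic functions for Java. */
final class HyperbolicSketch {
    private HyperbolicSketch() {}

    /** asinh(x) = ln(x + sqrt(x^2 + 1)), rewritten for log1p. */
    static double asinh(double x) {
        double a = Math.abs(x);
        // a + sqrt(1 + a^2) - 1 equals a + a^2 / (1 + sqrt(1 + a^2)).
        double r = Math.log1p(a + a * a / (1.0 + Math.sqrt(1.0 + a * a)));
        return Math.copySign(r, x); // asinh is an odd function
    }

    /** acosh(x) = ln(x + sqrt(x^2 - 1)), defined for x >= 1 only. */
    static double acosh(double x) {
        if (Double.isNaN(x) || x < 1.0) {
            return Double.NaN;
        }
        return Math.log(x + Math.sqrt((x - 1.0) * (x + 1.0)));
    }

    /** atanh(x) = 0.5 * ln((1 + x) / (1 - x)), intended for |x| < 1. */
    static double atanh(double x) {
        // (1 + x) / (1 - x) = 1 + 2x / (1 - x), which suits log1p.
        return 0.5 * Math.log1p(2.0 * x / (1.0 - x));
    }

    /** coth is simply the reciprocal of tanh. */
    static double coth(double x) {
        return 1.0 / Math.tanh(x);
    }

    public static void main(String[] args) {
        System.out.println("asinh(sinh(1.5)) = " + asinh(Math.sinh(1.5)));
        System.out.println("acosh(cosh(2.0)) = " + acosh(Math.cosh(2.0)));
        System.out.println("atanh(tanh(0.5)) = " + atanh(Math.tanh(0.5)));
    }
}
```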

Logarithms and exponential functions are found in two flavors on calculators: $\log$ and $10^x$, and $\ln$ and $e^x$. The $\log$ is kind of confusing, because in mathematics and physics and in most current programming languages $\log$ means the natural logarithm, which calculators call $\ln$. This is just a wrong naming on calculators, even if they all made the same mistake across all vendors and probably still do in the scientific calculator app on the phone or on the desktop. As IT people we tend to like the base two logarithm $\log_2$, so I would tend to add that to the list. Just to make the confusion complete, in some informatics text books and lectures the term „$\log$“ refers to the base two logarithm. It is a bad habit, and at least laziness should favor writing the correct „$\operatorname{ld}$“.
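Java has `Math.log` (base e), `Math.log10` and `Math.exp`, but no `log2`, `exp2` or `exp10`. A straightforward way to add them is sketched below. The class name is hypothetical, and the simple quotient and `Math.pow` formulas are assumptions chosen for brevity. A carefully written library version would aim for better accuracy than the quotient of two logarithms can guarantee.

```java
/** Hypothetical sketch of base-2 and base-10 helpers missing from java.lang.Math. */
final class LogExpSketch {
    private static final double LN_2 = Math.log(2.0);

    private LogExpSketch() {}

    /** Base two logarithm via change of base: log2(x) = ln(x) / ln(2). */
    static double log2(double x) {
        return Math.log(x) / LN_2;
    }

    /** 2 to the power of x. */
    static double exp2(double x) {
        return Math.pow(2.0, x);
    }

    /** 10 to the power of x. */
    static double exp10(double x) {
        return Math.pow(10.0, x);
    }

    public static void main(String[] args) {
        System.out.println("log2(8)  = " + log2(8.0));
        System.out.println("exp2(10) = " + exp2(10.0));
        System.out.println("exp10(3) = " + exp10(3.0));
    }
}
```

Naming the functions `log2` and `log10` rather than `ld` and `lg` avoids relying on abbreviations that not every reader knows.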

Then we usually have power functions $x^y$, which surprisingly many programming languages do not have. If they do, it is usually written as x ** y or pow(x, y). Further there are square root, square and maybe cube root and cube. Even though the square root and the cube root can be expressed as powers using $\sqrt{x} = x^{\frac{1}{2}}$ and $\sqrt[3]{x} = x^{\frac{1}{3}}$, it is better to do them as dedicated functions, because they are used much more frequently than any other power with non-integral exponents and it is possible to write optimized implementations that run faster and more reliably than the generic power, which usually needs to go via log and exp. Internal optimization of power functions is usually a good idea for integral exponents and can easily be achieved, at least if the exponent is actually of an integer type.
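For integral exponents the usual optimization is exponentiation by squaring, which needs only about $\log_2 n$ multiplications and avoids the detour via log and exp. The following Java sketch illustrates the idea. The class and method names are hypothetical, and this is not meant to describe what any particular standard library does internally.

```java
/** Hypothetical sketch: power with an int exponent by repeated squaring. */
final class PowSketch {
    private PowSketch() {}

    static double powInt(double base, int exponent) {
        // Use a long so that the absolute value of Integer.MIN_VALUE fits.
        long n = Math.abs((long) exponent);
        double result = 1.0;
        double factor = base;
        while (n > 0) {
            if ((n & 1L) == 1L) {
                result *= factor; // this bit of the exponent is set
            }
            factor *= factor;     // base^(2^k) for the next bit
            n >>= 1;
        }
        // Negative exponents give the reciprocal of the positive power.
        return exponent < 0 ? 1.0 / result : result;
    }

    public static void main(String[] args) {
        System.out.println("powInt(2, 10)  = " + powInt(2.0, 10));
        System.out.println("powInt(2, -2)  = " + powInt(2.0, -2));
        System.out.println("powInt(1.5, 3) = " + powInt(1.5, 3));
    }
}
```

For large exponents the rounding errors of the individual multiplications accumulate, so a generic pow with careful internal handling may still be more accurate than this simple loop.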

Factorial and binomial coefficient are usually used for integers, which is not part of this discussion. Extensions for floating point numbers can be defined, but they are beyond the scope of advanced school mathematics and of common scientific calculators. I do not think that they are needed in a standard floating point library. It is of its own interest what could be in an „advanced math library“, but […] and […] and […] for sure belong into the base math library.

That’s it. It would be easy to add all these into the standard library of any programming language that does floating point arithmetic at all and it would be helpful for those who work with this and not hurt at all those who do not use it, because this stuff is really small compared to most of our libraries. So this would be the list

- sin, cos, tan, cot, sec, csc in two flavors

- asin, acos, atan, acot, asec, acsc (standing for $\arcsin$ …) in two flavors

- p2r, r2p (polar coordinates to rectangular and reverse) or atan2

- sinh, cosh, tanh, coth, sech, csch

- asinh, acosh, atanh, acoth, asech, acsch (for $\operatorname{arsinh}$ …)

- exp, log (for $e^x$ and logarithm base e)

- exp10, exp2, log10, log2 (base 10 and base 2, I would not rely on knowledge that ld and lg stand for log2 and log10, respectively, but name them like this)

- sqrt, cbrt (for $\sqrt{x}$ and $\sqrt[3]{x}$)

- ** or pow with double exponent

- ** or pow with integer exponent (maybe the function with double exponent is sufficient)

[…], […], […], […] are maybe actually not needed, because we can just write them using ** and /

Actually pretty much every standard library contains sin, cos, tan, atan, exp, log and sqrt.

Java

Java is actually not so bad in this area. Beyond what every library contains, it offers atan2, sinh, cosh, tanh, asin, acos, atan, log10 and cbrt. It also contains conversions from degrees to radians and vice versa. And as you can see here in the source code of pow, the calculations are actually quite sophisticated and done in C. It seems to be inspired by GNU Classpath, which did a similar implementation in Java. It is typical that a function with a uniform mathematical definition gets very complicated internally with many cases, because depending on the parameters different ways of calculation provide the best precision. It is quite possible that this function is so good that calling it with an integer as the second parameter, which is then converted to a double, is actually good enough and leaves no need for a specific function with an integer exponent. I would tend to assume that this is the case.

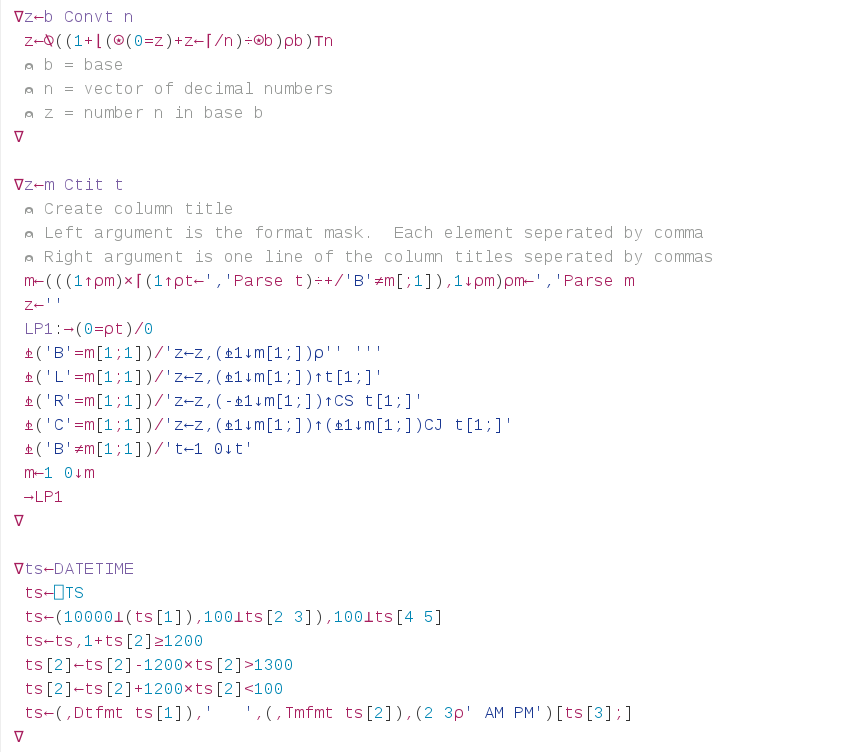

In this GitHub project we can see what a library could look like that completes the list above, includes unit tests and also works for the edge cases, which ad hoc solutions often do not. What could be improved is providing the best possible precision for any legitimate parameters, which I would see as an area of further investigation and improvement. The general idea is applicable to almost any programming language.

Two areas that have been known for a great need of such additional libraries are collections and date and time. I would say that really a lot of what I would wish from a decent collection library has been addressed by Guava. Getting date and time right is surprisingly hard, but just think of the year 2000 problem to see the significance of this issue. I would say Java had this one messed up, but Joda Time was a good solution, and its design has made it into the standard distribution as java.time in Java 8.

Summary

This may serve as an example. There are usually some functions missing for collections, strings, dates, integers etc. I might write about them as well, but they are less obvious, so I would like to collect some input before writing about that.

libc on Linux seems to contain sin, cos, tan, asin, acos, atan, atan2, sinh, cosh, tanh, asinh, acosh, atanh, sqrt, cbrt, log10, log2, exp, log, exp10, exp2. Surprisingly Java does not make use of these functions, but comes up with its own.

Actually a lot of functionality is already in the CPU-hardware. IEEE-recommendations suggest quite an impressive set of functions, but they are all optional and sometimes the accuracy is poor.

But standard libraries should be slightly more complete, and ideally there would be no need to write a „generic“ util library. Such libraries should only be needed for application-specific code that is somewhat generic across some projects of the organization, or for a really demanding application that needs more powerful functionality than can easily be provided in the standard library. Ideally these can be donated to the developers of the standard library and included in future releases, if they are generic enough. We should not forget that even programming languages that are mainstream and used by thousands of developers all over the world are usually maintained by quite small teams, sometimes only working part time on this. And usually it is hard for an outsider to get even a good improvement into their code base.

So what functions do you usually miss in the standard libraries?
